Value of tests

Testing is an important part of writing an application. There are many decisions to make about what, how and when to test. When I reason or talk about (potential) tests, it often helps me to think about their costs and values.

This text explains what these costs and values are, and some of the guidelines I derived from them.

Different test types

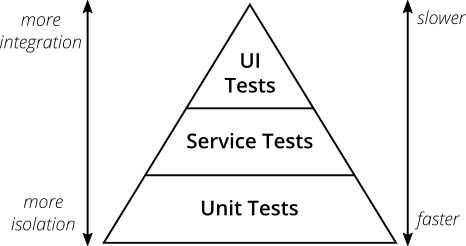

There are many types of tests. Often symbolized in pyramids like this:

Depending on your setup, you might have a different number of layers and call them differently. Note that I’m only considering functional tests here. You might also want performance, security or other tests.

Lately I’ve been working on small internal Kubernetes pods, where the layers look like this:

- Manual tests
- Environment tests
- System tests
- Integration tests
- Unit tests
- (Compiler)

With these meanings:

- “Unit tests”: one class in class-based languages
- “Integration tests”: multiple classes, often with embedded database/IO
- “System tests”: testing your pod from the outside
- “Environment tests”: testing multiple pods together
- “Manual tests”: tests that you have not automated (yet)

Note that the terms Environment, System and Integration tests vary in meaning depending on who you talk to. It helps to have consistent naming within your company.

If you have a category “End-to-end tests” and it’s not clear to everyone what they are, consider renaming it to something that describes what those ends are. Is it a mocked, embedded, sandbox or live API?

Value and costs

These things give tests value:

- They guard against bugs. The main value of tests is the assurance that your app behaves the way you expect it to, so you can be confident in deploying it to production.
- They help write code. Writing a test forces you to specify your inputs and outputs.
- They shorten the write-test cycle. Running a unit test is much faster than restarting your complete application and testing manually.
- They help with refactoring. If you have a good set of tests, you can refactor your code without fear.
- They document functionality. Tests can have names that describe user expectations.
- They document the code. Tests are small pieces of code that show how to call the code under test and what results to expect.

Tests also have costs:

- Time to write them.
- Time to maintain them. If you change the interface of a function, then tests of that function will break. If you change a class that’s being mocked elsewhere, that mock will break.
- They can impede refactoring. You might be reluctant to change code if it breaks a lot of tests.
- They add build time.

Maximize value

Be sure to actually use all of these value-giving properties.

Write tests before, or together with, the code, so you take advantage of the quick write-compile-test round.

Make sure you have enough tests so you can deploy without running extra manual tests.

Use the plain-text descriptions of your test cases to describe the functionality, and possibly why the functionality is like that.

Write readable test code.

It helps to clearly separate the setup, running and checking of the results. Some testing frameworks enforce that you label them with given/when/then. Otherwise you can use comments, or simply separate the parts with an empty line.
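As a minimal sketch of that separation, here is a test that marks the three parts with comments. The `Cart` class is hypothetical and only exists to give the test something to call; the test name doubles as a plain description of the expected behaviour.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cart:
    """Hypothetical class under test: a cart that sums item prices in cents."""

    prices: List[int] = field(default_factory=list)

    def add(self, price_cents: int) -> None:
        self.prices.append(price_cents)

    def total(self) -> int:
        return sum(self.prices)


def test_total_is_the_sum_of_all_added_items():
    # given: a cart with two items
    cart = Cart()
    cart.add(250)
    cart.add(100)

    # when: the total is requested
    total = cart.total()

    # then: it equals the sum of the item prices
    assert total == 350
```

A reader who only skims the test name and the three comments should already understand what the cart is supposed to do.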

Minimize costs

An interesting observation here is that tests can either help or hinder refactoring. It helps to structure the code well, following the principles of loose coupling and high cohesion.

You can split out longer-running tests so they don’t run on every build. Maybe only run them on master, or daily?
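One possible way to keep slow tests out of the regular build, sketched with Python’s standard `unittest` module. The environment variable name `RUN_SLOW_TESTS` and both test classes are assumptions for illustration; your build tool or test framework may well offer its own mechanism for this.

```python
import os
import unittest

# Assumption: the CI job for master (or a nightly job) sets RUN_SLOW_TESTS=1.
RUN_SLOW = os.environ.get("RUN_SLOW_TESTS") == "1"


class FastTests(unittest.TestCase):
    """Runs on every build."""

    def test_addition(self):
        self.assertEqual(1 + 1, 2)


@unittest.skipUnless(RUN_SLOW, "slow test; set RUN_SLOW_TESTS=1 to run it")
class SlowTests(unittest.TestCase):
    """Skipped unless explicitly enabled."""

    def test_something_long_running(self):
        # Placeholder for a test that starts a database, calls another pod, etc.
        self.assertTrue(True)
```

Skipped tests still show up in the test report, which makes it visible that they exist and were left out on purpose.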

Avoid tautological tests (https://blog.thecodewhisperer.com/permalink/the-curious-case-of-tautological-tdd)

Other tips

Test functionality, not code. You want to focus on the output of your functions, not so much on how they got there, because you might want to refactor that later on.
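To illustrate the difference, here is a small sketch with two tests for the same method. `PriceCalculator`, its VAT rate and the `_vat` helper are all made up for this example. The first test only looks at the output; the second one pins down how the result is computed.

```python
from unittest import mock


class PriceCalculator:
    """Hypothetical class under test."""

    VAT_RATE_PERCENT = 21  # assumed rate, for illustration only

    def _vat(self, net_cents: int) -> int:
        return net_cents * self.VAT_RATE_PERCENT // 100

    def gross(self, net_cents: int) -> int:
        return net_cents + self._vat(net_cents)


def test_gross_price_includes_vat():
    # Tests functionality: keeps passing after any refactoring
    # that preserves the output.
    assert PriceCalculator().gross(1000) == 1210


def test_gross_calls_vat_helper():
    # Tests code: breaks as soon as _vat is renamed or inlined,
    # even though the behaviour stays the same.
    calc = PriceCalculator()
    with mock.patch.object(calc, "_vat", return_value=210) as vat:
        calc.gross(1000)
    vat.assert_called_once_with(1000)
```

Both tests pass today, but only the first one still tells you something useful after you restructure the class.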

Use integration or system tests to test if your classes are connected well. You cannot test this with a mock.

Avoid mocking in general. Mocks often have high cost and low value. (https://www.thoughtworks.com/insights/blog/mockists-are-dead-long-live-classicists)

How many tests do I need

These costs and values are pretty abstract. You can’t do a clear calculation to determine whether a test is worth it or whether you need to add another one. In that regard, programming is more art than science: you’ll need to follow your gut (experience). Code reviews can help. You might think something is evident and doesn’t need a test because you’ve worked on it a lot, but a peer might have a fresh perspective.

Who decides how many tests to write:

The application: A medical application where people might die needs more rigorous testing than a game that’s played by only a few people.

The programmer: We as programmers are responsible for a well-running application. Management may have some say in deciding what kind of application we’re making, but ultimately it’s up to us to make the application reliable enough. It helps here if programmers also run standby shifts.

“This feature is very important and needs to be deployed as quickly as possible” is not a good reason for skipping tests, while “This feature is experimental, we want to try it with real users to see if they like it and it’s ok if it breaks” is.

Closing statements

Thinking about costs and values can help you think and talk about test improvements.

This is better and more nuanced than absolute statements like:

- “Mocks are always bad.” They’re not. Mocks are sometimes useful; they’re just often misused.
- “We need 90% code coverage.” Code coverage can give a quick idea about the general test/code ratio in parts of the codebase, but not more than that. (https://martinfowler.com/bliki/TestCoverage.html)

I probably forgot some value or cost. Please tell me if you think of another.