Machine Learning Systems require a paradigm shift in Software Engineering

More and more intelligence is being added to our applications. It can take many forms, but most often it appears as predictions or some kind of recognition. This added value comes at a price: it requires a new way of working within our established Software Engineering practices.

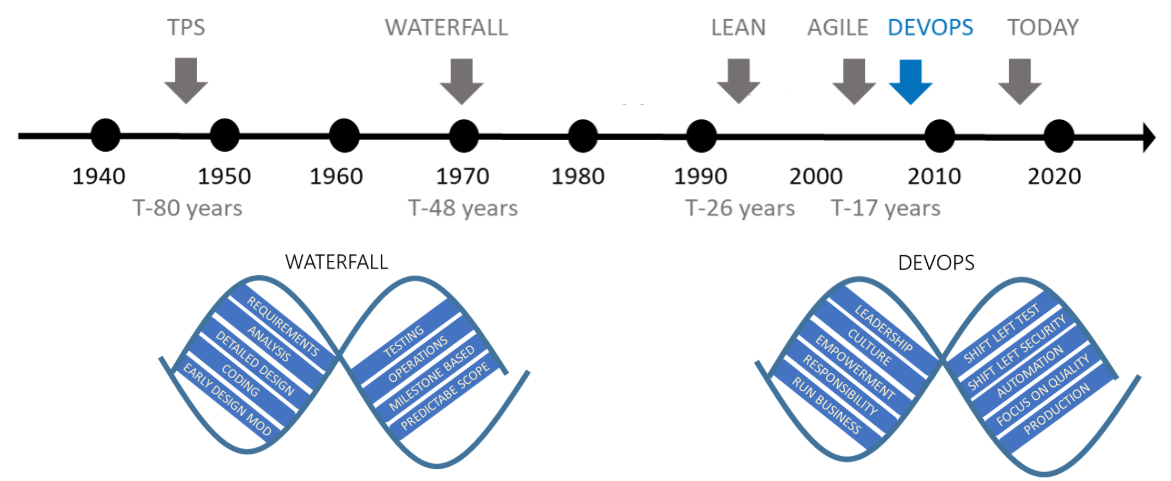

Software Engineering has evolved over time

Looking at the art of Software Engineering, we see that it has evolved from big upfront design to a more agile way of working. DevOps not only helps the business get to market faster; it also gives teams the autonomy and trust they need to be successful.

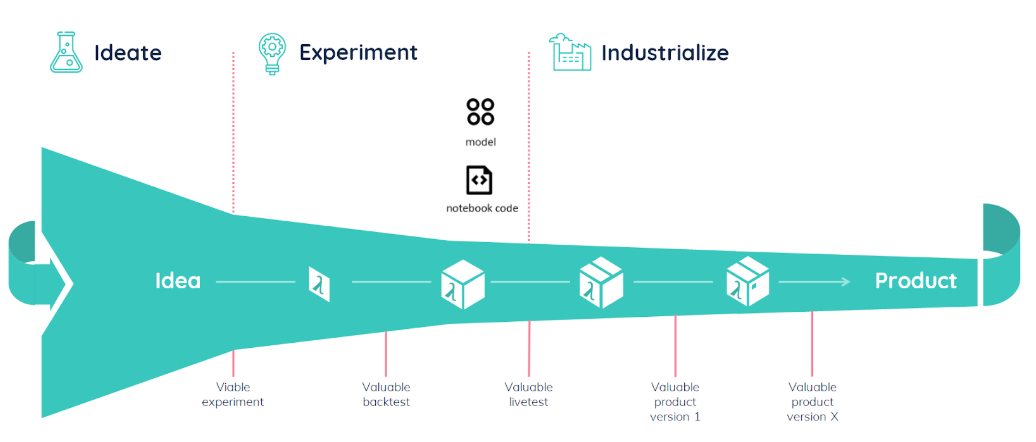

For applications that use Machine Learning, also known as Machine Learning systems, agile development is not nearly as well established. A lot of research and experimental work is done before an ML model is promoted to the next phase, let alone to production. This process can be described as a funnel.

It starts with an idea or business case that needs to be well described and measurable. This is followed by multiple iterations to arrive at the best-performing model, usually carried out by a Data Scientist on a laptop or in a development environment. Finally, the model needs to be productized or industrialized. This final step is mostly done by a Data Engineer, who deals with challenges such as performance and availability.

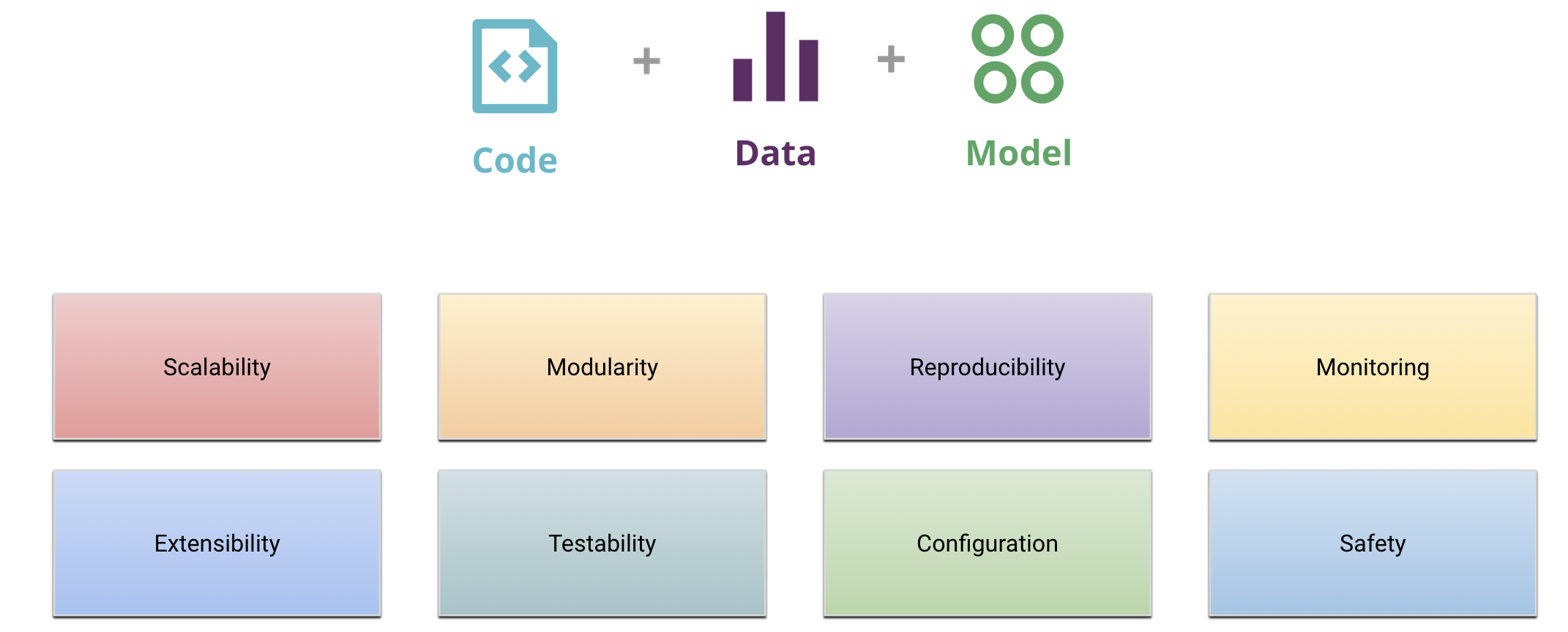

Machine Learning Systems and NFRs

Over the years, Non-Functional Requirements (NFRs) have been a way to describe the behaviour of an application in terms of the "-ilities", such as scalability, extensibility, modularity and testability. Most people think of these concepts in terms of code. With the advent of ML, however, they are fundamentally subject to data as well. If you think only about code and not about data, you see only half of the picture.

Let’s look at a few of those NFRs from an ML perspective.

Scalability

As your business grows, so might your data. Ideally, you want software that runs on a laptop so you can experiment quickly, but that can also be scaled out to a large network of machines with many processors.
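
One way to keep the same code usable on a laptop and on a larger cluster is to write the computation as per-chunk work plus a combine step, and to pass in the execution backend rather than hard-coding it. The sketch below is a minimal illustration under that assumption: `partition` and `map_reduce` are hypothetical helpers, and locally they run on Python's standard `ThreadPoolExecutor`. The assumption is that on a cluster you would pass in a different object implementing the same `concurrent.futures.Executor` interface, leaving the per-chunk logic untouched.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from functools import reduce
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def partition(rows: Sequence[T], n_parts: int) -> List[Sequence[T]]:
    """Split rows into at most n_parts contiguous chunks (hypothetical helper)."""
    size = max(1, -(-len(rows) // n_parts))  # ceiling division, at least 1
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def map_reduce(
    rows: Sequence[T],
    map_fn: Callable[[Sequence[T]], R],
    reduce_fn: Callable[[R, R], R],
    initial: R,
    executor: Executor,
    n_parts: int = 4,
) -> R:
    """Run map_fn on each chunk via the given executor, then fold the partial results."""
    partials = executor.map(map_fn, partition(rows, n_parts))
    return reduce(reduce_fn, partials, initial)


def count_and_sum(chunk: Sequence[int]) -> tuple:
    # Per-chunk work: a small partial aggregate instead of the raw rows.
    return (len(chunk), sum(chunk))


def combine(a: tuple, b: tuple) -> tuple:
    # Combine step: partial aggregates can be merged in any order.
    return (a[0] + b[0], a[1] + b[1])


data = list(range(1, 101))
with ThreadPoolExecutor(max_workers=4) as pool:  # local backend for quick experiments
    n, total = map_reduce(data, count_and_sum, combine, (0, 0), pool)
print(n, total, total / n)  # 100 5050 50.5
```

Because the combine step is associative, the number of chunks and the backend can change without changing the result; only the `executor` argument differs between environments.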

Extensibility

Libraries can be used out of the box, but you often want to customize parts of them. The same holds for ML: before you fit a model to your data, you usually need to apply several data transformations, and you want the flexibility to edit those transformations.
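
To make the idea of editable transformations concrete, here is a minimal sketch of a pipeline whose steps are named, so individual steps can be replaced or inserted without rewriting the rest. `TransformPipeline`, `drop_missing` and `min_max_scale` are hypothetical names chosen for this illustration; libraries such as scikit-learn provide a `Pipeline` class built on a similar idea.

```python
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, Optional[float]]
Step = Callable[[List[Row]], List[Row]]


class TransformPipeline:
    """An ordered, editable list of named data transformations (hypothetical helper)."""

    def __init__(self, steps: List[Tuple[str, Step]]):
        self.steps = list(steps)

    def _index(self, name: str) -> int:
        for i, (step_name, _) in enumerate(self.steps):
            if step_name == name:
                return i
        raise KeyError(name)

    def replace(self, name: str, step: Step) -> None:
        """Swap the implementation of an existing step, keeping its position."""
        self.steps[self._index(name)] = (name, step)

    def insert_after(self, name: str, new_name: str, step: Step) -> None:
        """Add a new step directly after an existing one."""
        self.steps.insert(self._index(name) + 1, (new_name, step))

    def apply(self, rows: List[Row]) -> List[Row]:
        """Run every step in order; each step returns a new list of rows."""
        for _, step in self.steps:
            rows = step(rows)
        return rows


def drop_missing(rows: List[Row]) -> List[Row]:
    """Drop rows that contain a missing (None) value."""
    return [r for r in rows if all(v is not None for v in r.values())]


def min_max_scale(field: str) -> Step:
    """Build a step that rescales one field to the range [0, 1]."""
    def step(rows: List[Row]) -> List[Row]:
        if not rows:
            return rows
        values = [r[field] for r in rows]
        lo, hi = min(values), max(values)
        span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
        return [{**r, field: (r[field] - lo) / span} for r in rows]
    return step


rows = [
    {"age": 20.0, "income": 1000.0},
    {"age": None, "income": 2000.0},
    {"age": 40.0, "income": 3000.0},
]
pipeline = TransformPipeline([("clean", drop_missing), ("scale_age", min_max_scale("age"))])
print(pipeline.apply(rows))
# [{'age': 0.0, 'income': 1000.0}, {'age': 1.0, 'income': 3000.0}]

# Editing the pipeline: add a step without touching the existing ones.
pipeline.insert_after("scale_age", "scale_income", min_max_scale("income"))
print(pipeline.apply(rows))
# [{'age': 0.0, 'income': 0.0}, {'age': 1.0, 'income': 1.0}]
```

Each step returns new rows instead of mutating its input, so the same raw data can be re-run through an edited pipeline.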

Modularity

Modularity is about well-designed APIs and reusable libraries. Ideally, you would be able to take components of a model trained on pictures and reuse them in another model that, for example, predicts the type of a chair. How does this apply to data artifacts? Data artifacts can be statistics computed over your data, or other information derived from it. They are typically produced continuously as new data arrives. Sometimes you have to merge artifacts together in order to operate your model in real time.
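
As an illustration of mergeable data artifacts, the sketch below assumes the artifact is a running summary (count, mean and variance) of one numeric feature. Each batch of data produces its own summary using Welford's update, and two summaries are merged with the pairwise variance formula attributed to Chan et al., without revisiting the raw data. `RunningStats` and `stats_of` are hypothetical names for this example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RunningStats:
    """Mergeable summary of a numeric feature (hypothetical artifact type)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean

    def update(self, x: float) -> "RunningStats":
        """Add one value (Welford's online update)."""
        count = self.count + 1
        delta = x - self.mean
        mean = self.mean + delta / count
        m2 = self.m2 + delta * (x - mean)
        return RunningStats(count, mean, m2)

    def merge(self, other: "RunningStats") -> "RunningStats":
        """Combine two summaries using the pairwise (parallel) variance formula."""
        if self.count == 0:
            return other
        if other.count == 0:
            return self
        count = self.count + other.count
        delta = other.mean - self.mean
        mean = self.mean + delta * other.count / count
        m2 = self.m2 + other.m2 + delta ** 2 * self.count * other.count / count
        return RunningStats(count, mean, m2)

    @property
    def variance(self) -> float:
        """Population variance of all values seen so far."""
        return self.m2 / self.count if self.count else 0.0


def stats_of(values: Iterable[float]) -> RunningStats:
    stats = RunningStats()
    for v in values:
        stats = stats.update(v)
    return stats


batch_a = stats_of([1.0, 2.0, 3.0])  # artifact from one batch
batch_b = stats_of([4.0, 5.0])       # artifact from a later batch
merged = batch_a.merge(batch_b)
print(merged.count, merged.mean, merged.variance)  # 5 3.0 2.0
```

Because merging only needs the summaries, artifacts computed on different machines or at different times can be combined cheaply when the model needs up-to-date statistics.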

Testability

Unit tests, configuration tests and regression tests are all about code. The equivalent of a unit test for data is writing expectations about the data: its types, its values and its distributions. With ML we no longer have the strong contracts we had before, because the behaviour of the system depends on the data it receives. Instead, we need expectations of the data.
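
A minimal sketch of such data expectations, covering the three kinds mentioned above (type, value range, and a simple distribution check on the mean). The helper names `expect_type`, `expect_between`, `expect_mean_between` and `validate` are hypothetical and are not the API of Great Expectations or any other library; the `age` column and its bounds are made-up example values.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class Expectation:
    description: str
    check: Callable[[List[Row]], bool]


def expect_type(column: str, typ: type) -> Expectation:
    """Every row has a value of the given type in this column."""
    return Expectation(
        f"{column} is of type {typ.__name__}",
        lambda rows: all(isinstance(r[column], typ) for r in rows),
    )


def expect_between(column: str, low: float, high: float) -> Expectation:
    """Every value in this column lies within [low, high]."""
    return Expectation(
        f"{column} in [{low}, {high}]",
        lambda rows: all(low <= r[column] <= high for r in rows),
    )


def expect_mean_between(column: str, low: float, high: float) -> Expectation:
    """The column mean (a simple distribution check) lies within [low, high]."""
    return Expectation(
        f"mean of {column} in [{low}, {high}]",
        lambda rows: bool(rows) and low <= mean(r[column] for r in rows) <= high,
    )


def validate(rows: List[Row], expectations: List[Expectation]) -> List[str]:
    """Return descriptions of all failed expectations; an empty list means the data passed."""
    failures = []
    for e in expectations:
        try:
            ok = e.check(rows)
        except (KeyError, TypeError, ValueError):
            ok = False  # a missing column or an incomparable value counts as a failure
        if not ok:
            failures.append(e.description)
    return failures


expectations = [
    expect_type("age", int),
    expect_between("age", 0, 120),
    expect_mean_between("age", 18, 65),
]
good = [{"age": 25}, {"age": 40}, {"age": 33}]
bad = [{"age": 25}, {"age": -3}, {"age": 30.5}]
print(validate(good, expectations))  # []
print(validate(bad, expectations))
# ['age is of type int', 'age in [0, 120]', 'mean of age in [18, 65]']
```

Like a unit test suite, a list of expectations can run on every new batch of data, so a failing batch is caught before it reaches model training or serving.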

Moving to Iterative Machine Learning with ML-OPS

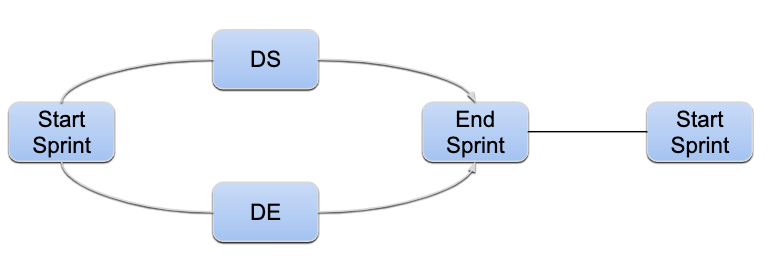

Data Scientists often start on their laptops, because that is the environment they know best. They work and experiment on their solutions until they arrive at what the business needs. Today, those solutions are too often thrown over the wall, violating many good Software Engineering practices. A possible solution is an iterative way of development, in which Data Science and Data Engineering initially work in parallel during the sprint. This gives Data Science the focus it needs to work on the model(s), while Data Engineering can start building the pipeline. At the end of the sprint, everything comes together into a functional solution that can be promoted to production. An additional benefit is that each iteration of the model is promoted to production, where it is 'tested' in the real world.

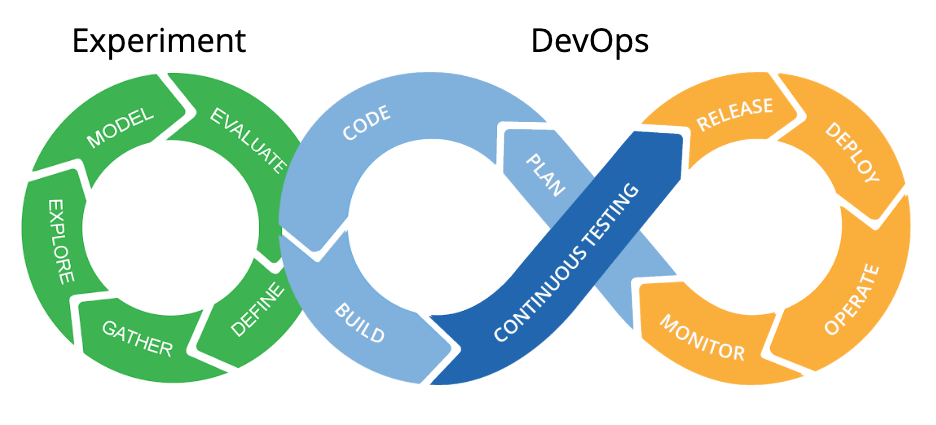

Building Machine Learning Systems comes down to integrating the ML development cycle into the standard DevOps cycle that is so familiar from Software Engineering. Combining the two gives us ML-OPS, also called AI-OPS.